Image resizer options

Resizing ensures that every image fed to the network has the same shape. You can change the resizer in the preprocessing steps.

It is generally recommended to keep the default resizing values. However, there are cases where you may want your model trained on different sizes, for example:

Adapting to use-case images that are smaller or of lower quality than your training data

Mitigating regions with unusual aspect ratios (see Keep Aspect Ratio resizer)

When experimenting with resizing, change the scale significantly (e.g. multiply or divide it by 2 or 4). Smaller changes are unlikely to produce meaningful differences in the results.

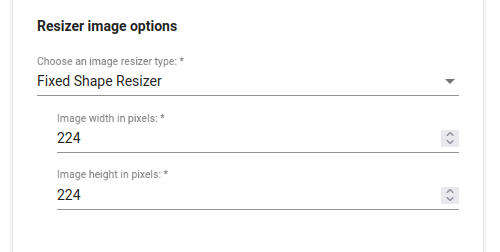

Fixed size resizer

Here you can manually set the height and width of your images. Values are restricted to increments of 16 pixels to comply with the convolution constraints of some architectures (e.g. YOLO). Height and width do not need to be equal.
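As an illustration of the 16-pixel constraint, the sketch below snaps a requested size to the nearest multiple of 16. The helper name `snap_to_multiple`, the round-half-up behaviour, and the minimum of one step are assumptions made for this example; they are not taken from the product.

```python
def snap_to_multiple(value, step=16):
    """Snap a requested size (in pixels) to the nearest multiple of `step`.

    Hypothetical helper: the rounding direction and the lower bound of
    one step are assumptions, not documented behaviour.
    """
    if value <= 0:
        raise ValueError("size must be positive")
    # Integer arithmetic, rounding halves up (e.g. 600 -> 608 for step=16).
    snapped = (value + step // 2) // step * step
    return max(step, snapped)


# Height and width are snapped independently; they need not be equal.
height, width = snap_to_multiple(600), snap_to_multiple(1030)
print(height, width)  # 608 1024
```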

This is the recommended option when the aspect ratios of your images are not extreme (approximately square or 16:9). You generally don't have to worry about slightly distorting your images: even if they lose their "natural" aspect, the network learns from the distorted images, and performance should be the same. Variation in aspect ratio can even act as a form of data augmentation.

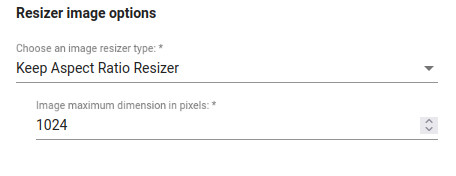

Keep Aspect Ratio resizer

This resizer resizes all images to the same size while keeping their original aspect ratio, padding the remaining area with black pixels. Note that its parameters are minimum and maximum dimensions, not height and width. The same scaling ratio is applied to both dimensions, chosen so that the result does not exceed the maximum dimension parameter.
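The padding step can be pictured with a small sketch that works on a plain list of pixel rows. The function name `pad_to_square`, the square canvas, and the choice to pad on the bottom and right are assumptions made for illustration; where the actual resizer places the padding is not specified here.

```python
def pad_to_square(pixels, size, fill=0):
    """Pad a 2D grid of pixel values to a size x size canvas.

    Assumption: the image is anchored at the top-left corner and the
    padding (black, i.e. 0) goes on the right and bottom.
    """
    height = len(pixels)
    width = len(pixels[0]) if height else 0
    if height > size or width > size:
        raise ValueError("image is larger than the target canvas")
    # Extend each existing row to the right, then append full black rows.
    padded = [list(row) + [fill] * (size - width) for row in pixels]
    padded += [[fill] * size for _ in range(size - height)]
    return padded


# A 2x3 image padded to a 3x3 canvas: one black row is added at the bottom.
print(pad_to_square([[1, 2, 3], [4, 5, 6]], 3))
```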

Note that the minimum dimension parameter (in pixels) is not available in Studio; it is 1024 by default. Do not set a maximum dimension lower than 1024, otherwise preprocessing will crash.

The output size can be described by two cases:

1. If the image can be rescaled so that its smaller dimension equals min_dimension without the other dimension exceeding max_dimension, it is rescaled that way.

2. Otherwise, the image is resized so that its larger dimension equals max_dimension.
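The two cases above can be sketched as a short function. This is a minimal illustration, not the actual preprocessing code: the function name `keep_aspect_ratio_size`, the default value chosen for `max_dimension`, and rounding to the nearest pixel are assumptions. It returns the size of the rescaled image before any padding is applied.

```python
def keep_aspect_ratio_size(height, width, min_dimension=1024, max_dimension=1024):
    """Return the (height, width) of the rescaled image, before padding."""
    short_side, long_side = min(height, width), max(height, width)
    if short_side <= 0:
        raise ValueError("image dimensions must be positive")

    # Case 1: scale so that the smaller side equals min_dimension.
    scale = min_dimension / short_side

    # Case 2: if that pushes the larger side past max_dimension,
    # scale so that the larger side equals max_dimension instead.
    if round(long_side * scale) > max_dimension:
        scale = max_dimension / long_side

    # The same ratio is applied to both sides (rounding is an assumption).
    return round(height * scale), round(width * scale)


print(keep_aspect_ratio_size(600, 600))                      # (1024, 1024)
print(keep_aspect_ratio_size(600, 900, max_dimension=1024))  # (683, 1024)
print(keep_aspect_ratio_size(600, 900, max_dimension=2048))  # (1024, 1536)
```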

Examples

Example 1: 600x600 image. The smaller side is 600. Scaling it up to min_dimension = 1024 also brings the larger side to 1024.

Example 2: 600x900 image. The smaller side is 600. Scaling it up to min_dimension = 1024 applies the ratio 1024/600, so the larger side would become 900*(1024/600) = 1536.

If you set a max_dimension lower than that (e.g. 1024), the second case applies: the larger side is resized to max_dimension = 1024, and the smaller side is scaled accordingly to 600*(1024/900), about 683.

If you set a higher max_dimension (e.g. 2048), the first case applies: the smaller side is scaled up to 1024 and the larger side to 1536.

The "Keep Aspect Ratio" resizer can be useful when the original images/regions have unusual ratios.

Note that in a child view under a detection view, the "images" are actually the parent regions. Since these regions are originally bounding boxes fitted to the objects, they could be highly distorted if they were all resized to the same fixed size.
