December 13, 2023

NEW FEATURES 🚀

Integrate the Deepomatic camera into your field app with the Camera Connector

The Camera Connector is designed to redirect field users directly to the camera in the Deepomatic application. With the Camera Connector, you can enhance your field application with a seamless photo capture and AI feedback experience.

For more details regarding the integration, please check the technical documentation.

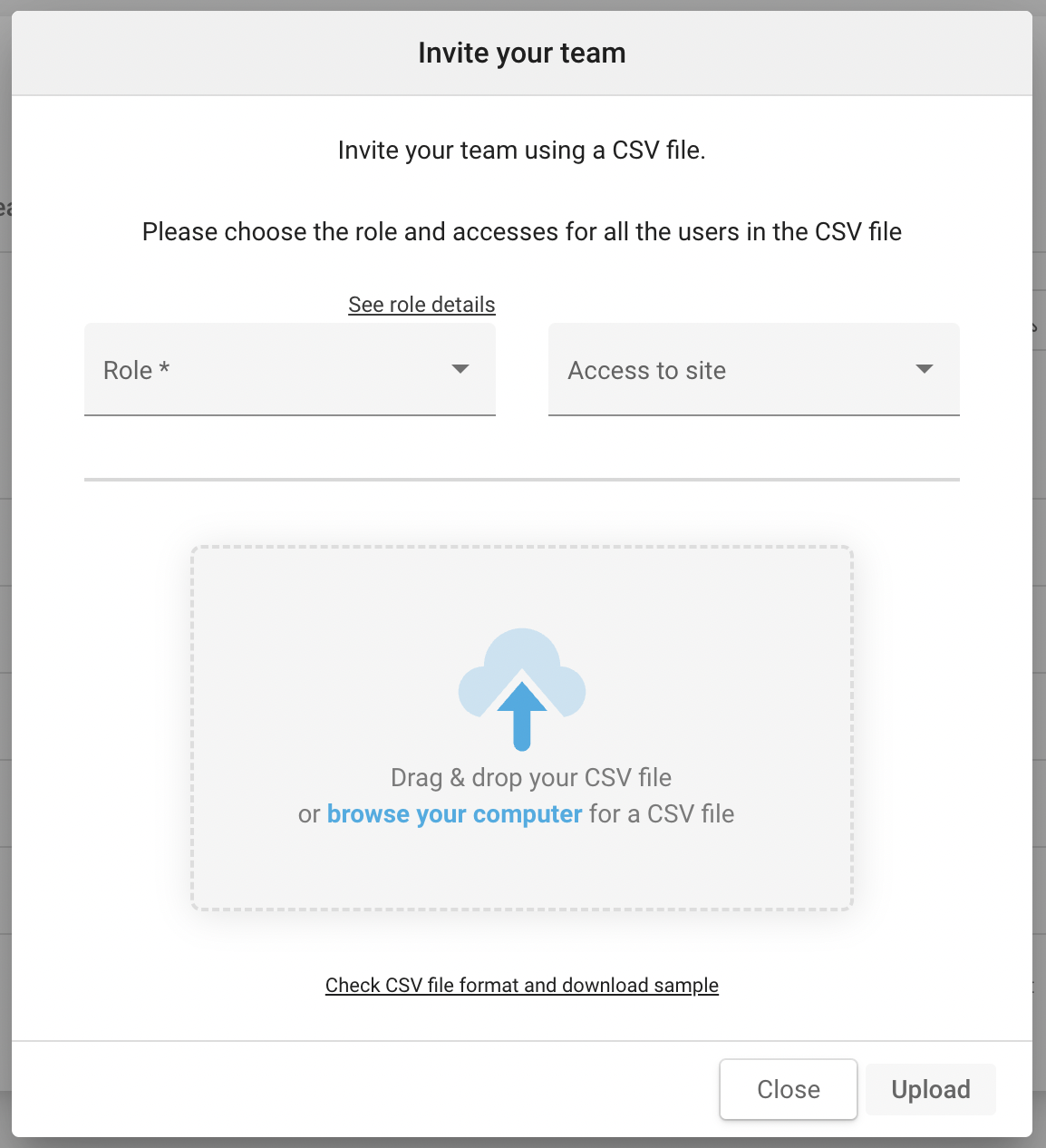

Invite a group of users into the Deepomatic studio

You can now invite a group of users into the Deepomatic studio at once, and assign them a permission role and mobile app access, from the 'My organization' page.

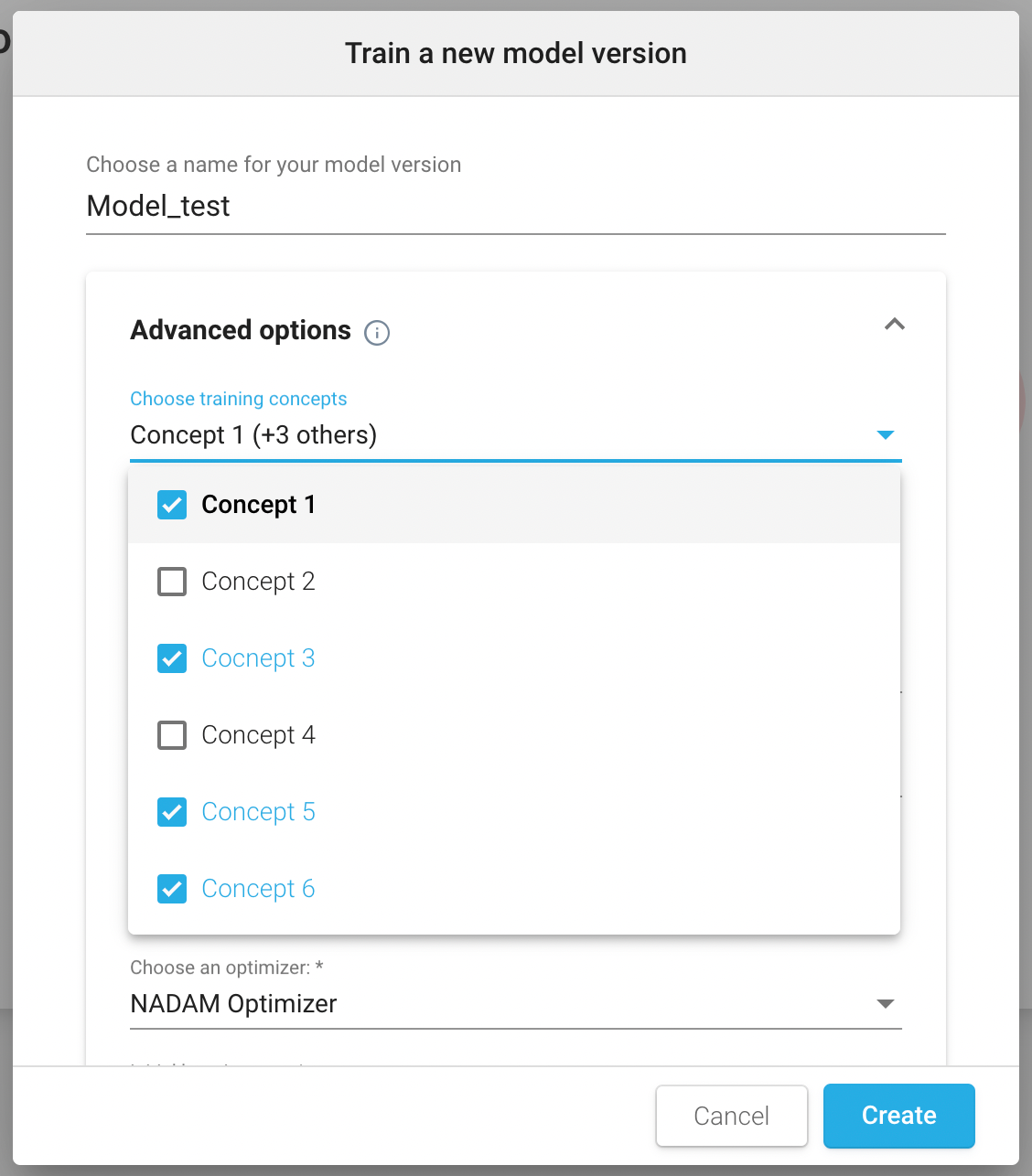

Select which concepts to train on inside a view

This feature allows you to select the concepts on which you want to train models inside the views and disregard the others by treating the associated images as having no concept. You can access this capability in the training advanced options:

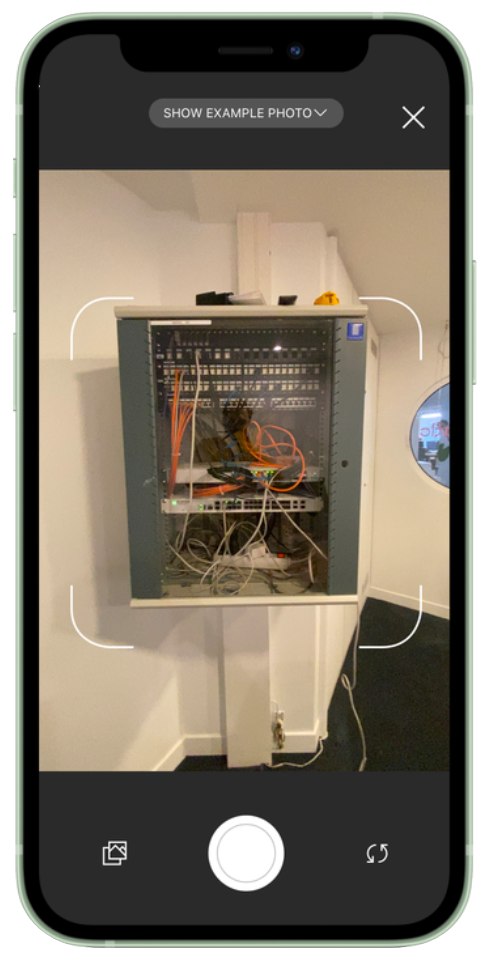
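To make the behaviour concrete, here is a minimal sketch of what concept selection amounts to conceptually. It is an illustration only, not Deepomatic's implementation: the function name `restrict_to_concepts`, the annotation layout (image name mapped to a list of concept names), and the sample concepts are all assumptions made for this example.

```python
def restrict_to_concepts(
    annotations: dict[str, list[str]], selected: set[str]
) -> dict[str, list[str]]:
    """Keep only the selected concepts for each image.

    Images whose concepts were all deselected are kept with an empty list,
    i.e. they are treated as having no concept (hypothetical data layout).
    """
    return {
        image: [concept for concept in concepts if concept in selected]
        for image, concepts in annotations.items()
    }


# Hypothetical view content: image name -> annotated concepts.
view = {"a.jpg": ["cable", "box"], "b.jpg": ["ladder"]}
training_set = restrict_to_concepts(view, {"cable"})
```

In this sketch, `b.jpg` stays in the training set but carries no concept, which mirrors the description above: deselected concepts are disregarded rather than their images being dropped.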

Visual guide to improve photo framing

Good photo framing is essential both for thorough reporting and for high AI performance. The visual guide therefore helps field users frame their photos so that what needs to be analysed (e.g. a specific piece of equipment) is fully visible.

Identify job defects within the Operational Review tab

With the Operational Review, you can highlight job defects that are identified by the AI models. Based on your configuration and the AI analysis, a list of job defects will be asynchronously generated. Depending on your configuration, there can be several defects per work order.

Once you are on the job defects page, you will be able to:

Either validate or dismiss the defect.

Generate a PDF that summarises the validated defects. A message can be added to this PDF when it is generated.

IMPROVEMENTS 👍

In the photo capture interface, it is now possible to consult example photos while taking a photo.

Field users are now notified when a Deepomatic error occurs during the analysis and the analysis cannot be carried out. They can then choose to retake the photo or to go back to the work order page.

It is now possible to push comments even if no reason has been configured. In that case, the default reason "Comment" is used.

A new version of the blur algorithm is now available. Performance has been improved and this version is no longer threshold-dependent, unlike its predecessor. The previous version will still be maintained.

The readability of the AI results at the task level has been improved in the work order and asset pages.

It is now possible to zoom in on images in Collections.

FIXES 🔧

For the POST work order endpoint, the metadata field now only accepts key-value objects. If another type is passed (e.g. a string), a 400 error is returned.
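As an illustration, a client could check the metadata before sending the request. The sketch below is an assumption-laden example rather than the Deepomatic API: the helper names and the `name` field in the body are hypothetical, and only the rule that `metadata` must be a key-value object comes from the note above.

```python
import json
from typing import Any


def is_key_value_object(value: Any) -> bool:
    """Return True when value would serialize to a JSON object with string keys."""
    return isinstance(value, dict) and all(isinstance(key, str) for key in value)


def build_work_order_body(name: str, metadata: Any) -> str:
    """Serialize a hypothetical work order body, refusing non-object metadata.

    Raising locally avoids sending a request that would be answered with a 400.
    """
    if not is_key_value_object(metadata):
        raise ValueError("metadata must be a key-value object")
    # "name" is a placeholder field used only for this example.
    return json.dumps({"name": name, "metadata": metadata})


# Accepted: metadata is a key-value object.
body = build_work_order_body("wo-1", {"site": "Paris"})
```

Passing a string, a list, or a number as `metadata` to this helper raises `ValueError`, matching the cases the endpoint now rejects.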

The link to shared models in the app version has been fixed and now routes to the correct models.

The problem with searching for users on the 'My organization' page has been fixed.

The prediction threshold slider has been fixed.

Task group names were shown instead of display names. The issue has been fixed and display names are now shown.

All bounding boxes are now displayed when zooming on an image.

Bounding boxes now follow the rotation of the image both when it is zoomed out and zoomed in.

The chosen image rotation is now preserved after zooming on an image.

The issue where corrections to an analysis were not saved has been fixed.
