Training options

Last updated

Was this helpful?

Last updated

Was this helpful?

The three key training parameters are the number of iterations, initial learning rate, and neural network architecture.

For the neural network architecture selection, you first need to understand how neural networks work:

and then decide on the best option for your problem:

The number of iterations is the number of passes (one pass corresponding to the forward of some images into the neural network and the backpropagation of the error in the neural layers) through the neural network.

For each pass, the number of images that will be used is defined by the batch size. It can be found on the page Available architectures.

One epoch corresponds to the number of iterations that are necessary to go through all the images in a training set.

We no longer allow initiating the training with the iteration number hyperparameter; instead, we now launch training with the specified epoch number.

A good value for the number of epochs is between 6 and 15

The relationship between the number of iterations and the number of epochs is the following:

Number of iterations per epoch = number of training images/ batch size

The optimizer is the algorithm technically responsible for training the model, in other words, the rule that is followed to update the parameters of the model in order to improve its performance.

In the literature, we can encounter different algorithms that use mainly the gradient of the loss as a rule to update the parameters.

In the platform we have set by default, for each architecture, a given optimizer with a given learning rate, which were a result of a benchmark campain.

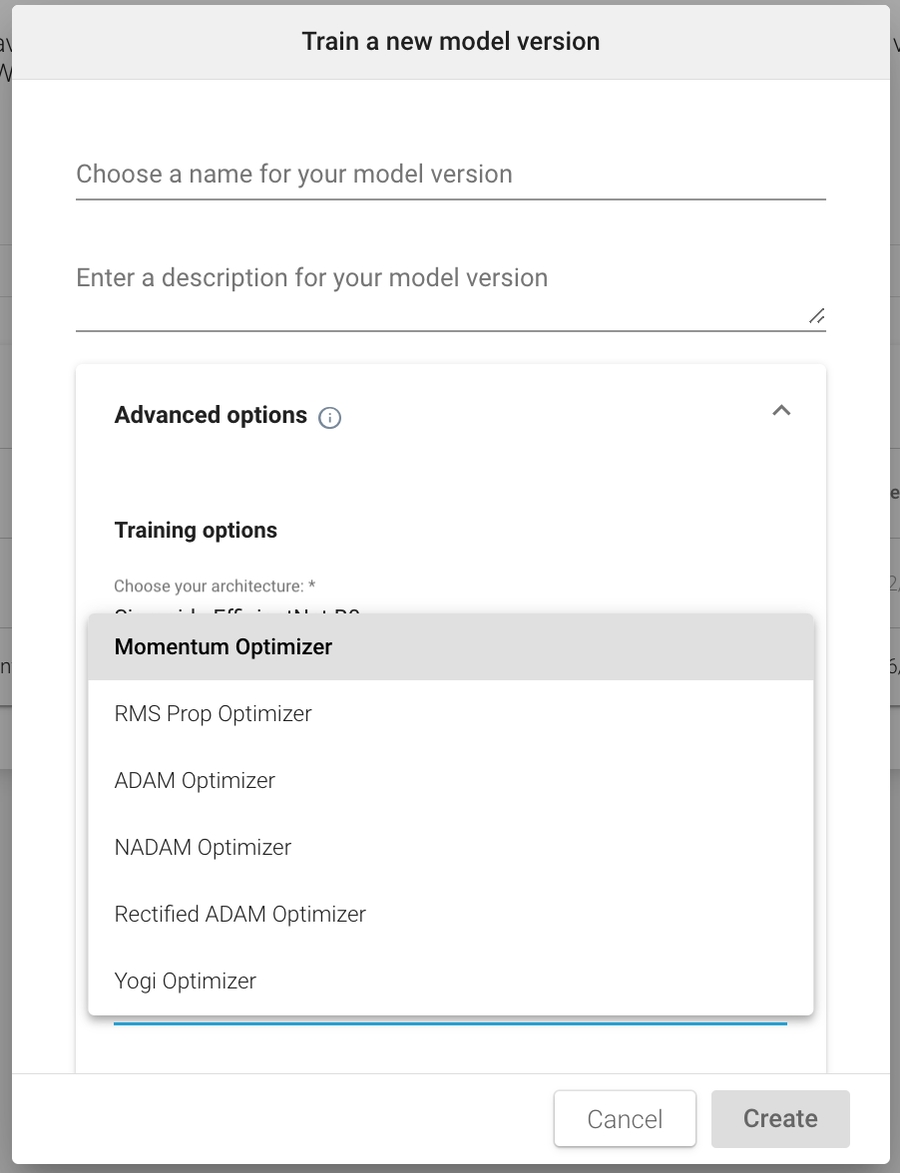

In the case of Classification and Tagging, you have a choice between the following optimizers :

Momentum (SGD)

Nadam

Adam

Rectified Adam

YOGI

RMS Prop

If you change the optimizer (in the case of Classification and Tagging) the value of the learning rate changes automatically. This value is a recommended value, but you can change it if you want to experiment

For Object Detection Tasks, changing the optimizer will not modify the learning rate. We recommend you use the default optimizer per architecture, as well as its default learning rate