Data Augmentation

Here we describe the data augmentation techniques you can use during training.

By applying modifications to the images in your dataset, data augmentation artificially widens the range of data your model sees during training. Creating new images from existing ones adds variation to your dataset, which can be an excellent way to boost your model's performance.

Most operations have a probability factor attached: each time an image is extracted from your dataset, an activated technique is applied with a probability of x%. Because this draw is repeated on every extraction, the same image can be transformed differently across epochs, or not transformed at all.
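
As a minimal sketch of this sampling rule (not the platform's actual implementation), the Python below applies each activated operation with its own probability, in the order it was added. The `augment` function, the toy `hflip`/`vflip` operations and the grayscale list-of-rows image format are hypothetical, chosen only for illustration.

```python
import random
from typing import Callable, List, Sequence, Tuple

Image = List[List[float]]  # toy grayscale image: rows of values in [0, 1]
Op = Callable[[Image], Image]


def augment(image: Image, pipeline: Sequence[Tuple[Op, float]], rng: random.Random) -> Image:
    """Apply each (operation, probability) pair in the order it was added.

    A fresh random draw is made for every operation each time the image is
    extracted, so repeated calls on the same image can give different results.
    """
    for op, probability in pipeline:
        if rng.random() < probability:
            image = op(image)
    return image


def hflip(image: Image) -> Image:
    """Mirror left to right."""
    return [row[::-1] for row in image]


def vflip(image: Image) -> Image:
    """Mirror top to bottom."""
    return [list(row) for row in image[::-1]]


if __name__ == "__main__":
    rng = random.Random(0)
    pipeline = [(hflip, 0.5), (vflip, 0.5)]
    image = [[0.0, 0.5], [1.0, 0.2]]
    for epoch in range(3):  # the same source image, extracted in three epochs
        print(epoch, augment(image, pipeline, rng))
```

Because a new draw is made on every call, the three simulated epochs can return the same source image in different orientations.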

All augmentations can be used together.

Each augmentation can be added only once.

The order in which you add augmentations can matter, since each one is applied to the output of the previous one.

Data augmentation is not available for YOLO architectures.

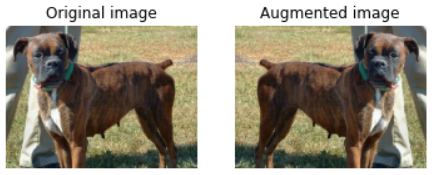

Horizontal flip

The image is flipped horizontally with a 50% chance, meaning it is mirrored across its vertical center axis (left and right are swapped).

If you are trying to detect objects whose left or right orientation matters, this augmentation is strongly discouraged.
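
A sketch of a random horizontal flip in Python, assuming images are lists of pixel rows. For a detection dataset, bounding boxes have to be mirrored together with the image; the `(x_min, y_min, x_max, y_max)` pixel format and all function names here are assumptions for illustration, not the platform's API. The mirroring also shows why this augmentation hurts left/right-sensitive tasks: a left-sided object comes out looking right-sided.

```python
import random
from typing import List, Sequence, Tuple

Image = List[List[float]]
# Hypothetical box format: (x_min, y_min, x_max, y_max) in pixel units,
# with x measured from the left edge (0) to the right edge (image width).
Box = Tuple[float, float, float, float]


def hflip_image(image: Image) -> Image:
    """Mirror the image left to right: column j moves to column width - 1 - j."""
    return [row[::-1] for row in image]


def hflip_box(box: Box, width: float) -> Box:
    """Mirror a box so it still covers the same object after the flip."""
    x_min, y_min, x_max, y_max = box
    return (width - x_max, y_min, width - x_min, y_max)


def random_horizontal_flip(
    image: Image, boxes: Sequence[Box], rng: random.Random, p: float = 0.5
) -> Tuple[Image, List[Box]]:
    """With probability p, flip the image and its boxes horizontally."""
    if rng.random() >= p:
        return image, list(boxes)
    width = len(image[0])
    return hflip_image(image), [hflip_box(b, width) for b in boxes]
```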

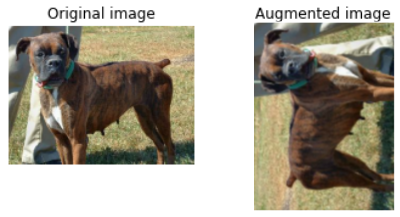

Vertical flip

The image is flipped vertically with a 50% chance, meaning it is mirrored across its horizontal center axis (top and bottom are swapped).

Note that a vertical flip is different from a 180° rotation, which is equivalent to a horizontal flip followed by a vertical flip.
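
A small grid makes the difference visible. In this hypothetical Python sketch (function names are illustrative, images are lists of rows), a vertical flip only reverses the row order, while a 180° rotation also reverses each row, which is the same as composing both flips.

```python
from typing import List

Grid = List[List[int]]


def hflip(image: Grid) -> Grid:
    """Mirror left to right."""
    return [list(row[::-1]) for row in image]


def vflip(image: Grid) -> Grid:
    """Mirror top to bottom: row i moves to row height - 1 - i."""
    return [list(row) for row in image[::-1]]


def rot180(image: Grid) -> Grid:
    """Rotate by 180 degrees: reverse the row order and each row."""
    return [list(row[::-1]) for row in image[::-1]]


image = [[1, 2, 3],
         [4, 5, 6]]
print(vflip(image))   # [[4, 5, 6], [1, 2, 3]]
print(rot180(image))  # [[6, 5, 4], [3, 2, 1]]
```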

90° rotation

Rotates the image by 90° counter-clockwise with a 50% chance. Combine it with 'Random Horizontal Flip' and 'Random Vertical Flip' to get all eight combinations of 90° rotations and mirror symmetries.
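
To see why combining the three operations covers every direction, the sketch below (hypothetical helper names, images as lists of rows) tries all on/off combinations of a horizontal flip, a vertical flip and a 90° counter-clockwise rotation on a 2×2 grid. They produce 8 distinct results: the 4 rotations and 4 mirror images of the square. Assuming each operation is drawn independently with a 50% chance, each of these 8 orientations appears with probability 1/8. For non-square images, a 90° rotation also swaps width and height.

```python
import itertools
from typing import List, Set, Tuple

Grid = List[List[int]]


def hflip(g: Grid) -> Grid:
    return [list(row[::-1]) for row in g]


def vflip(g: Grid) -> Grid:
    return [list(row) for row in g[::-1]]


def rot90_ccw(g: Grid) -> Grid:
    """Rotate 90 degrees counter-clockwise: transpose, then reverse row order."""
    return [list(col) for col in zip(*g)][::-1]


def reachable_orientations(g: Grid) -> Set[Tuple[Tuple[int, ...], ...]]:
    """Apply every on/off combination of hflip, vflip and rot90_ccw (in that order)."""
    results = set()
    for do_h, do_v, do_r in itertools.product([False, True], repeat=3):
        out = g
        if do_h:
            out = hflip(out)
        if do_v:
            out = vflip(out)
        if do_r:
            out = rot90_ccw(out)
        results.add(tuple(tuple(row) for row in out))
    return results


image = [[1, 2],
         [3, 4]]
print(rot90_ccw(image))                    # [[2, 4], [1, 3]]
print(len(reachable_orientations(image)))  # 8
```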

Modify brightness

Randomly changes the image brightness with a 20% chance. When applied, it adds a single random value, sampled uniformly from [-max_delta, max_delta], to every RGB channel of every pixel. The resulting values are clipped to the [0, 1] range.

The max_delta parameter sets how much brighter or dimmer your image can get.

This means that a value of 0 does not dim the image; it prevents any change.
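
A minimal Python sketch of this behavior, assuming a height × width × RGB nested-list image with values already in [0, 1]. The function name and the `p` argument (defaulting to the 20% chance above) are hypothetical; only `max_delta` comes from the description.

```python
import random
from typing import List

Image = List[List[List[float]]]  # height x width x RGB, values in [0, 1]


def random_brightness(image: Image, max_delta: float, rng: random.Random, p: float = 0.2) -> Image:
    """With probability p, add one uniform random delta to every channel of every pixel."""
    if max_delta < 0:
        raise ValueError("max_delta must be non-negative")
    if rng.random() >= p:
        return image
    delta = rng.uniform(-max_delta, max_delta)  # one value for the whole image
    # Saturate the result so every value stays in [0, 1].
    return [[[min(1.0, max(0.0, c + delta)) for c in pixel] for pixel in row] for row in image]
```

With `max_delta=0` the sampled delta is always 0, so the image is returned unchanged, matching the note above.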

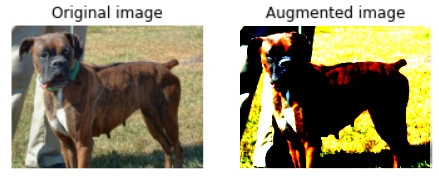

Modify contrast

Randomly scales the contrast by a factor between min_delta and max_delta. For each RGB channel, this operation computes the mean of the image pixels in that channel, then adjusts each component x of each pixel to '(x - mean) * contrast_factor + mean', where a single 'contrast_factor' is sampled uniformly from [min_delta, max_delta] for the whole image. A factor below 1 pulls values toward the channel mean (less contrast); a factor above 1 pushes them away from it (more contrast).

This operation has no probability factor: when activated, it is applied every time.
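
The contrast formula above can be sketched in Python as follows, assuming a height × width × RGB nested-list image. `random_contrast` is a hypothetical name; `min_delta`, `max_delta` and `contrast_factor` follow the description. The description does not say whether values are clipped afterwards, so this sketch leaves them unclipped. Since each channel is scaled around its own mean, the channel means are unchanged by the operation.

```python
import random
from typing import List

Image = List[List[List[float]]]  # height x width x RGB


def random_contrast(image: Image, min_delta: float, max_delta: float, rng: random.Random) -> Image:
    """Scale every channel around its own mean by one random contrast_factor."""
    if min_delta > max_delta:
        raise ValueError("min_delta must not exceed max_delta")
    contrast_factor = rng.uniform(min_delta, max_delta)  # one factor per image
    pixels = [pixel for row in image for pixel in row]
    channels = len(pixels[0])
    means = [sum(p[c] for p in pixels) / len(pixels) for c in range(channels)]
    # (x - mean) * contrast_factor + mean, channel by channel; no clipping here.
    return [
        [[(pixel[c] - means[c]) * contrast_factor + means[c] for c in range(channels)] for pixel in row]
        for row in image
    ]
```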

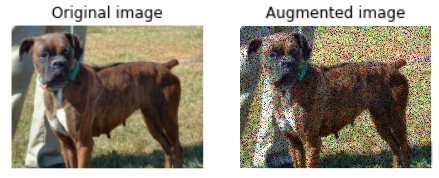

Add gaussian noise

Randomly modifies a patch of the image by adding Gaussian noise to its pixel values, which are normalized between 0 and 1.

This operation has no probability factor: when activated, it is applied every time.
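
The description does not state the patch size, the noise standard deviation, or how the patch is placed, so the following Python sketch treats all of these as assumptions: `patch_size` and `stddev` are hypothetical parameters, the patch is a square placed uniformly at random inside the image, noise is drawn independently per channel, and noisy values are clipped back to [0, 1].

```python
import random
from typing import List, Tuple

Image = List[List[List[float]]]  # height x width x RGB, values in [0, 1]


def add_gaussian_noise_patch(
    image: Image, patch_size: int, stddev: float, rng: random.Random
) -> Tuple[Image, Tuple[int, int, int, int]]:
    """Add zero-mean Gaussian noise inside one randomly placed square patch.

    patch_size and stddev are hypothetical parameters. Returns the noisy copy
    and the patch as (top, left, patch_height, patch_width).
    """
    height, width = len(image), len(image[0])
    ph, pw = min(patch_size, height), min(patch_size, width)
    top = rng.randint(0, height - ph)  # randint is inclusive on both ends
    left = rng.randint(0, width - pw)
    out = [[list(pixel) for pixel in row] for row in image]  # leave the input untouched
    for i in range(top, top + ph):
        for j in range(left, left + pw):
            # Independent noise per channel, clipped back to [0, 1] (assumption).
            out[i][j] = [min(1.0, max(0.0, c + rng.gauss(0.0, stddev))) for c in out[i][j]]
    return out, (top, left, ph, pw)
```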

AutoAugment

AutoAugment is a method, published in the 2018 paper "AutoAugment: Learning Augmentation Policies from Data", that learns the best augmentations to apply during training. Its policies mix random image translations, color histogram equalizations, "graying" out patches of the image, sharpness adjustments, image shearing, image rotations and color balance adjustments.

You can find the original paper explaining the technique here: https://arxiv.org/abs/1805.09501

The original paper proposes an ideal policy (a series of transformations) that performed best on ImageNet in its classification benchmarks. It is the one called "Original AutoAugment policy" when you select AutoAugment as your preprocessing.
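
In the AutoAugment paper, a policy is made of several sub-policies; each sub-policy is a short sequence of operations, each with a probability of being applied and a magnitude, and one sub-policy is picked at random for every image. The Python sketch below illustrates that mechanism with two toy operations and a made-up policy. The operation names, the level-to-strength mapping and `apply_policy` are hypothetical and do not reproduce any of the published policies.

```python
import random
from typing import Callable, Dict, List, Tuple

Image = List[List[float]]  # toy grayscale image, values in [0, 1]
Step = Tuple[str, float, int]  # (operation name, probability, integer magnitude level)
SubPolicy = List[Step]


def brighten(image: Image, level: int) -> Image:
    shift = level / 10 * 0.5  # hypothetical level-to-strength mapping
    return [[min(1.0, v + shift) for v in row] for row in image]


def flip_lr(image: Image, level: int) -> Image:
    return [row[::-1] for row in image]  # magnitude is ignored for flips


OPERATIONS: Dict[str, Callable[[Image, int], Image]] = {
    "Brightness": brighten,
    "FlipLR": flip_lr,
}

# Made-up toy policy: NOT the "Original AutoAugment policy" or the v1/v3 policies.
TOY_POLICY: List[SubPolicy] = [
    [("Brightness", 0.6, 4), ("FlipLR", 0.5, 0)],
    [("FlipLR", 1.0, 0), ("Brightness", 0.2, 8)],
]


def apply_policy(image: Image, policy: List[SubPolicy], rng: random.Random) -> Image:
    """Pick one sub-policy at random, then run its steps in order."""
    sub_policy = rng.choice(policy)
    for name, probability, level in sub_policy:
        if rng.random() < probability:
            image = OPERATIONS[name](image, level)
    return image
```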

A later paper ("Learning Data Augmentation Strategies for Object Detection", 2019) applied the same approach to object detection and released several policies that worked best when training on the COCO dataset. We selected the v1 and v3 versions (v3 gave the best results in our own research), which you can select when working in a detection view under the following, more descriptive names:

v1: "More color & BBoxes vertical translate transformations"

v3: "More contrast & vertical translate transformations"

In our experience, AutoAugment gives better results on detection tasks and/or with smaller architectures such as MobileNet or ResNet-50.

You may want to disable the other data augmentations so they do not interfere with the AutoAugment policy.

This operation has no probability factor: when activated, it is applied every time.
