Spotting and Correcting Annotation Errors

Clean datasets are crucial to getting the best performance from your models. A clean dataset is one with no annotation errors. In practice, this is very hard to achieve on datasets of tens of thousands or even millions of images: annotating images with bounding boxes and visual concepts is tedious, and like any repetitive task, it leads to human mistakes at large volumes. On the Deepomatic platform, you can run audits on your annotations to surface the images that potentially contain errors.

Launching an Audit

In the navigation bar on the left side of the platform, click the Correct annotations button to access the Error Gallery page. On this page, you can view potential errors and also launch an audit.

When you launch an audit, all your annotations are automatically reviewed and the Error Gallery is populated with all potential errors that need review.

Review an Annotation Error

No matter what type of view you are working on, you can review an annotation error by clicking on an image in the Error Gallery, or by clicking on Correct errors at the top of the page to use the Multi-User mode.

Types of Errors

There are two types of errors that are displayed in separate tabs in the Error Gallery:

Missing annotation

Classification and Tagging: a missing annotation corresponds to an image that has been negatively annotated with a given concept when it should have been positively annotated with that concept. For tagging views, all concepts are independent, so an error only affects the concept in question. In classification, on the other hand, correcting the annotation of one concept necessarily impacts the other concepts.

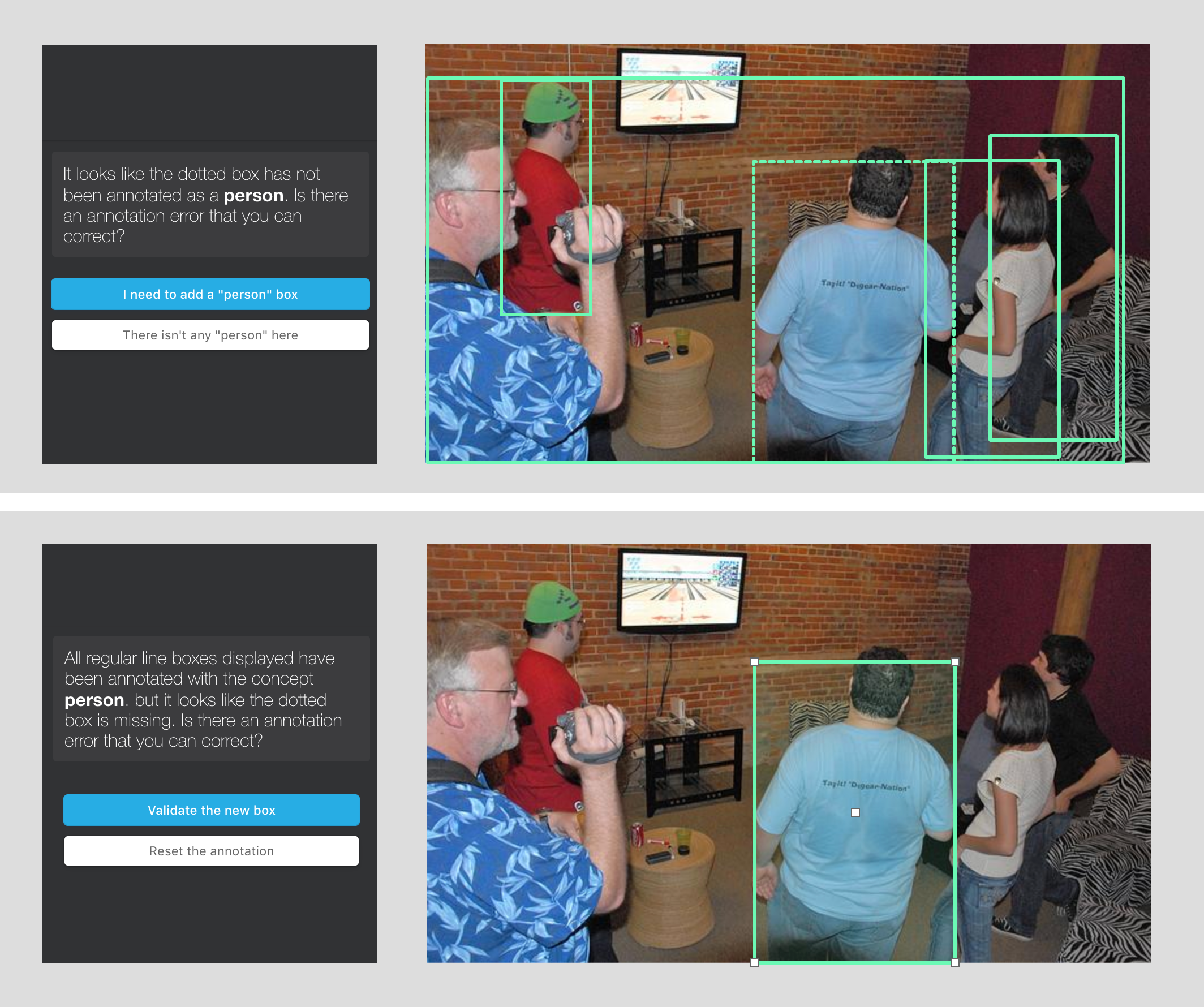

Detection: a missing annotation corresponds to a box that has been forgotten. Correcting the error means deciding whether the proposed region of the image should be annotated with a box of the given concept. It does not involve reviewing the annotation of the whole image, or adding and removing boxes for all concepts.

Extra annotation

Classification and Tagging: an extra annotation corresponds to an image that has been positively annotated with a given concept when it should have been negatively annotated with that concept.

Detection: an extra annotation corresponds to a box that should not have been added. Correcting the error means deciding whether the corresponding box should be removed.
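The two error types defined above for classification and tagging views can be summarized in a few lines of Python. This is only an illustrative sketch: the function name `error_type` and its parameters are hypothetical and are not part of the Deepomatic platform or API.

```python
from typing import Optional


def error_type(annotated: bool, expected: bool) -> Optional[str]:
    """Classify a per-concept disagreement for a classification or tagging view.

    annotated: True if the image is currently annotated positively with the concept.
    expected:  True if the image should be annotated positively with the concept
               (hypothetical reference value, e.g. the reviewer's decision).
    """
    if expected and not annotated:
        return "missing"  # negative annotation that should have been positive
    if annotated and not expected:
        return "extra"    # positive annotation that should have been negative
    return None           # annotation and expectation agree: no error


print(error_type(annotated=False, expected=True))   # missing
print(error_type(annotated=True, expected=False))   # extra
print(error_type(annotated=True, expected=True))    # None
```

For detection views, the same logic would apply to each box or proposed region rather than to the whole image.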

Review Process

If you edit the annotation for an image outside the error correction section, all errors relating to that image are deleted. This explains why the number of audit-related errors can decrease without any action on your part in the error correction section.

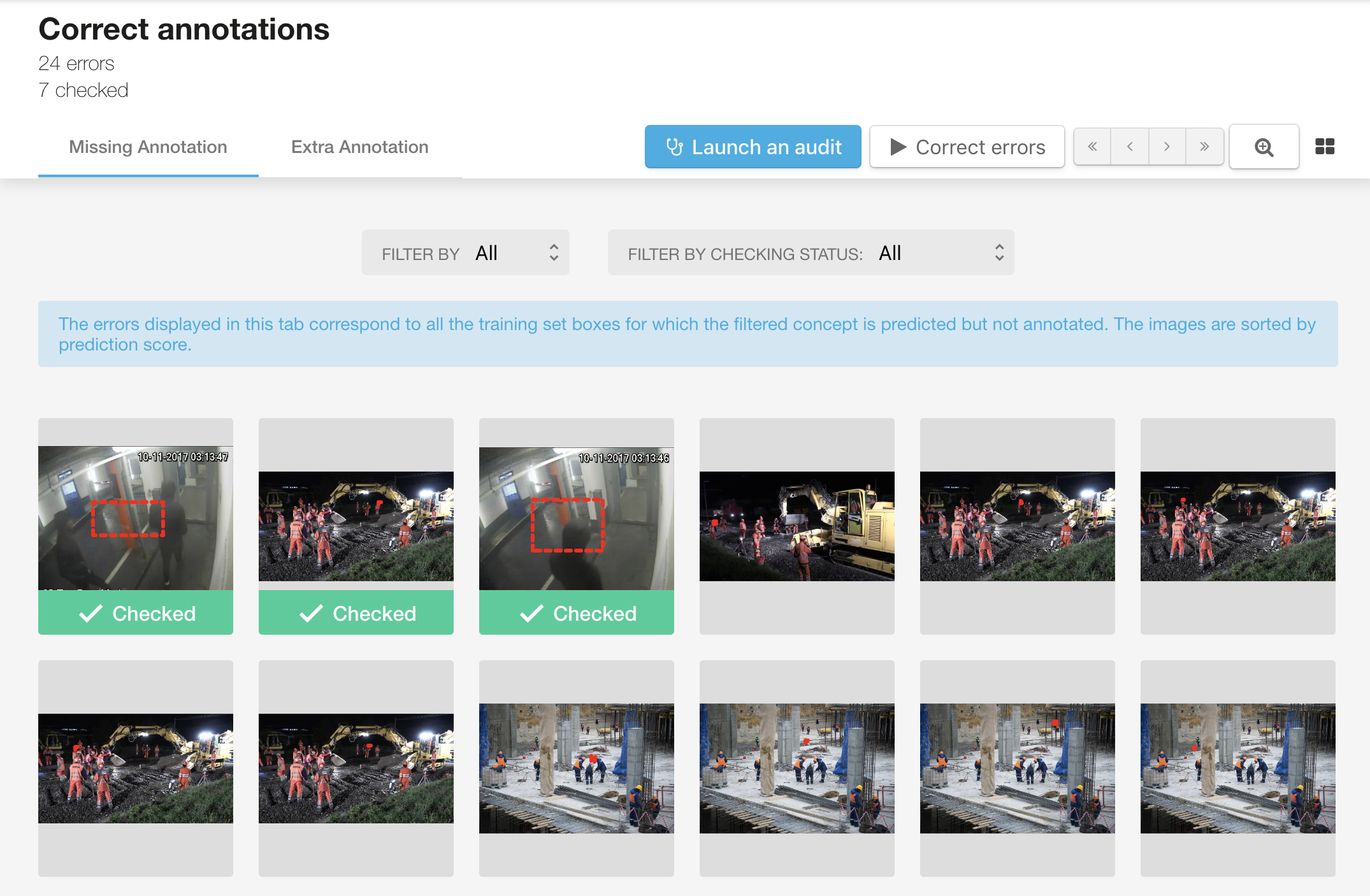

Checked Errors

At the end of each audit, the annotation errors to be reviewed are displayed in the Error Gallery, in the two tabs presented above. In this gallery, you can filter by the concept you want to work on.

Clicking on an error takes you to a page where you can visualize the corresponding error. You then have two choices:

Correct the error, which edits the annotation (by changing a specific concept, or by adding or deleting a box).

Validate the annotation as it is, without any modification.

In either case, at the end of this review, the corresponding annotation error is marked as checked, so you can clearly differentiate at any time between errors already processed and those still to be reviewed. You can use the filter at the top of the page to display only errors that have not been reviewed yet.

You may have the impression that some errors are duplicated in the gallery. They are not duplicates: the same image appears several times when it contains several errors.
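To make the filtering and the apparent duplicates more concrete, here is a minimal sketch of an error list in Python. The `AuditError` structure and the helper functions are assumptions made for illustration; they do not reflect how the platform stores or exposes errors.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class AuditError:
    """Hypothetical record for one potential error raised by an audit."""
    image_id: str
    concept: str
    kind: str              # "missing" or "extra"
    checked: bool = False  # True once the error has been corrected or validated


def to_review(errors: List[AuditError], concept: Optional[str] = None) -> List[AuditError]:
    """Keep only unchecked errors, optionally restricted to one concept."""
    return [
        e for e in errors
        if not e.checked and (concept is None or e.concept == concept)
    ]


def errors_per_image(errors: List[AuditError]) -> Counter:
    """Count errors per image; a count above 1 explains an apparent duplicate."""
    return Counter(e.image_id for e in errors)


errors = [
    AuditError("img_1", "cat", "missing"),
    AuditError("img_1", "dog", "extra"),
    AuditError("img_2", "cat", "extra", checked=True),
]

print(to_review(errors, concept="cat"))      # only the unchecked "cat" error on img_1
print(errors_per_image(errors)["img_1"])     # 2
```

In this sketch, `img_1` would appear twice in the gallery because it carries two distinct errors, one per concept.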

History of Errors

The platform also natively stores the history of errors and reviews. Each action (correction or validation of an annotation) is stored so that the same errors are not displayed again at each audit.

Let's imagine, for example, that an audit generates a "missing annotation" error corresponding to a missing box. A user reviews this error and confirms the annotation, so they do not add a box at the time of their review. When a new audit is launched, a similar error will not be displayed for the same box, because it is considered already reviewed.

To learn more about audits and error surfacing, read the following section.
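The idea behind this history can be sketched as follows. This is a simplified illustration under stated assumptions, not the platform's implementation: `ErrorKey` and `ReviewHistory` are hypothetical names, and errors are matched by exact equality, whereas a real system would likely need a looser notion of "similar" for boxes.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class ErrorKey:
    """Hypothetical identity of an error: image, concept, type and region."""
    image_id: str
    concept: str
    kind: str                      # "missing" or "extra"
    region: Tuple[int, ...] = ()   # assumed box coordinates; empty for whole-image errors


class ReviewHistory:
    """Stores review actions so that reviewed errors are not surfaced again."""

    def __init__(self) -> None:
        self._reviewed: Dict[ErrorKey, str] = {}

    def record(self, key: ErrorKey, action: str) -> None:
        # Both outcomes of a review are stored: a correction or a validation.
        if action not in ("corrected", "validated"):
            raise ValueError(f"unknown review action: {action}")
        self._reviewed[key] = action

    def filter_new(self, candidates: List[ErrorKey]) -> List[ErrorKey]:
        # Drop candidates from a new audit that have already been reviewed.
        return [key for key in candidates if key not in self._reviewed]


history = ReviewHistory()
reviewed = ErrorKey("img_1", "cat", "missing", (10, 20, 50, 60))
history.record(reviewed, "validated")  # the user confirmed the annotation as it is

# A second audit proposes the same error again, plus a new one.
new_error = ErrorKey("img_2", "cat", "missing", (5, 5, 40, 40))
print(history.filter_new([reviewed, new_error]))  # only the img_2 error remains
```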

Inside the Error Spotting Engine