Site commands

Site commands let you interact directly with deployed Deepomatic sites from your terminal. The available actions are:

create / delete

manifest

install / uninstall

show

current (start, stop, logs, status)

list / save / load

update / upgrade / rollback

intervention

model (infer, draw, blur, noop)

Create / Delete

To create a site, use the following command. Specify a name and the ID of the application version to deploy on the site. You can change this application version later.

deepo site create -n my-super-site -v app_version_id

To delete a site, use the following command, specifying the ID of the site to delete.

deepo site delete -s site_id

Manifest

To obtain the manifest needed to install your site, use the manifest command. Specify the site ID and the type of manifest that matches your deployment.

The available target manifests are:

gke: Google Kubernetes Engine

docker-compose

Install / Uninstall

Run the install command locally. The site is installed using the latest docker-compose or Kubernetes manifest downloaded from the API.

Uninstalling a site removes all of its versions and files.

Show

The show command displays the ID of the currently deployed site and the ID of the currently installed application.

Current

The current command gives access to the following subcommands for the currently deployed site: start, stop, logs and status.

List / Save / Load

The list command returns the IDs of the sites that have been installed.

The save command creates an archive containing the given site. You can then install the site from this archive on another server, for example an offline one.

The load command loads a site from an archive generated by a save command.

Upgrade / Rollback

The upgrade command installs a new version of the site, using the latest docker-compose or Kubernetes manifest from the API.

The rollback command reloads the nth previous version. For example, an upgrade followed by rollback 1 leaves the site unchanged.
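To make the rollback arithmetic concrete, here is a minimal Python sketch. It models a site's history as a list of installed versions. It is an illustration only and does not reflect how the CLI actually stores versions. The class and the version names are hypothetical.

```python
class SiteVersionHistory:
    """Hypothetical model of the versions installed on a site (illustration only)."""

    def __init__(self, initial_version: str) -> None:
        self.versions = [initial_version]  # installed versions, oldest first
        self.position = 0                  # index of the version currently running

    @property
    def current(self) -> str:
        return self.versions[self.position]

    def upgrade(self, new_version: str) -> None:
        # Drop any versions after the current one, then install the new one on top
        self.versions = self.versions[: self.position + 1] + [new_version]
        self.position = len(self.versions) - 1

    def rollback(self, n: int = 1) -> None:
        # Reload the nth previous version, counted from the current one
        if not 0 < n <= self.position:
            raise ValueError(f"cannot roll back {n} version(s) from {self.current!r}")
        self.position -= n


history = SiteVersionHistory("app-v1")
history.upgrade("app-v2")
history.rollback(1)
print(history.current)  # prints app-v1: upgrade followed by rollback 1 is a no-op
```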

Intervention

The intervention command lets you interact with the customer API. For it to work, your application must include a customer-api service. The available subcommands are create, delete, infer and status.

Model

When you deploy an application version on a site, you can run inferences with the model version included in that application version.

Get your Model Version ID and your Routing Key

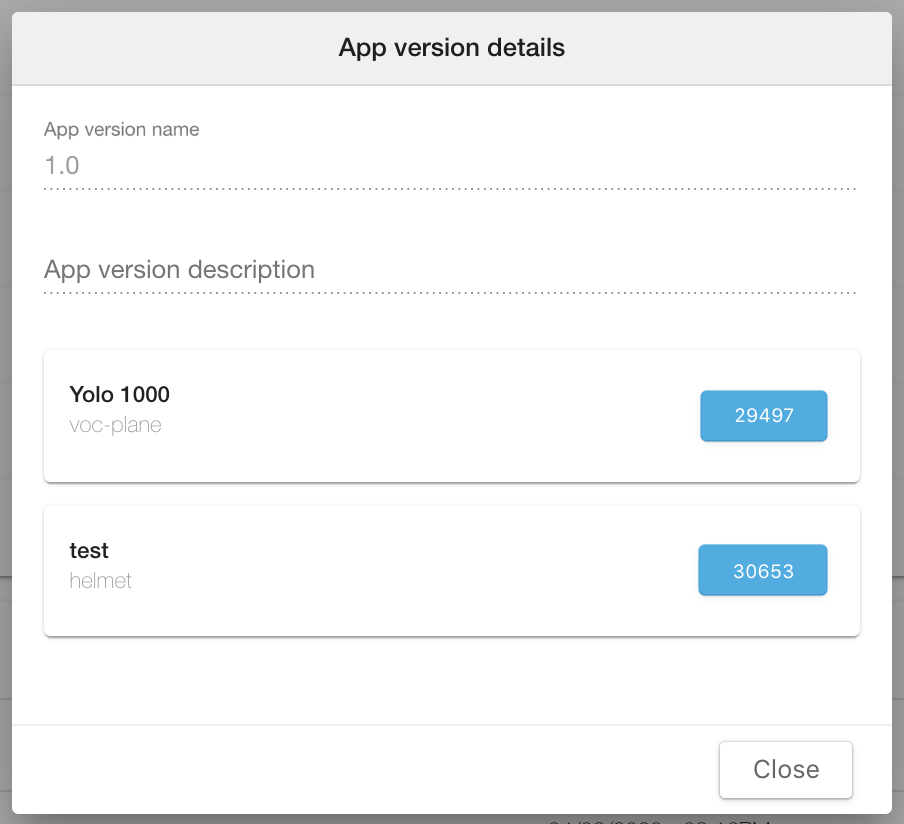

To retrieve the MODEL_VERSION_ID of the model version used by your application, along with its Routing Key, go to the Apps tab of the Deployment section. Then click the app version deployed on your site.

The MODEL_VERSION_ID is specified with the -r or --recognition_id argument.

The ROUTING_KEY corresponds to the RabbitMQ queue identifier. It is specified with the -k or --routing_key argument.

You also need to provide one additional parameter to run model actions: the AMQP_URL, specified with the -u argument. It corresponds to the RabbitMQ address, which you will find in your server deployment files. It should look like amqp://user:pwd@ip:port/vhost.

This parameter is defined during the GPU server setup. Apart from this URL, you do not need to specify any credentials.
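Before passing the address with -u, it can help to check that it has the expected amqp://user:pwd@ip:port/vhost shape. The following Python sketch splits such a URL with the standard library's urllib.parse. The function name and the sample address are hypothetical.

```python
from urllib.parse import unquote, urlsplit


def parse_amqp_url(url: str) -> dict:
    """Split an AMQP URL of the form amqp://user:pwd@ip:port/vhost into its parts."""
    parts = urlsplit(url)
    if parts.scheme != "amqp":
        raise ValueError(f"not an AMQP URL: {url!r}")
    return {
        "user": parts.username,
        "password": parts.password,
        "host": parts.hostname,
        "port": parts.port,                 # None when the URL omits the port
        "vhost": unquote(parts.path[1:]),   # drop the leading "/" and decode %2F etc.
    }


print(parse_amqp_url("amqp://user:pwd@192.168.1.10:5672/vhost"))
```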

Sample commands

Model actions

There are four different model actions:

infer: Compute predictions only.

draw: Display the prediction results, whether tags or bounding boxes.

blur: Blur the bounding boxes.

noop: Benchmark input and output processing capabilities.

All four actions follow the same recipe:

Retrieve one or several inputs.

Compute predictions using the trained neural network

Output the result in different formats: image, video, JSON, stream, etc.
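The three steps can be sketched as a simple loop in Python. The function names, the prediction dictionaries and the stand-in model are hypothetical, not the CLI's internals. In this sketch, predictions are computed once per input and handed to every requested output. The same idea explains why specifying several outputs in a single run avoids duplicate computations.

```python
from typing import Callable, Dict, Iterable, List

Prediction = Dict[str, object]
Output = Callable[[str, List[Prediction]], None]


def run_model_action(
    inputs: Iterable[str],
    predict: Callable[[str], List[Prediction]],
    outputs: List[Output],
) -> int:
    """Hypothetical sketch of the shared recipe; returns the number of inputs processed."""
    processed = 0
    for item in inputs:               # 1. retrieve the inputs one by one
        predictions = predict(item)   # 2. compute predictions once per input
        for write in outputs:         # 3. hand the same predictions to every output
            write(item, predictions)
        processed += 1
    return processed


# Usage with a stand-in model and two in-memory outputs
def fake_predict(item: str) -> List[Prediction]:
    return [{"label": "car", "score": 0.9}]


json_results: Dict[str, List[Prediction]] = {}
drawn: List[str] = []
count = run_model_action(
    ["img1.jpg", "img2.jpg"],
    fake_predict,
    [
        lambda item, preds: json_results.update({item: preds}),  # e.g. a JSON output
        lambda item, preds: drawn.append(item),                  # e.g. an image output
    ],
)
```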

Input

Input types

The Deepomatic CLI supports different types of input:

Image: Supported formats include bmp, jpeg, jpg, jpe, png, tif and tiff.

Video: Supported formats include avi, mp4, webm and mjpg.

Studio JSON: Deepomatic Studio JSON format, used to specify several images or videos.

Directory: Analyse all images and videos found in the directory.

Digit: Retrieve the stream from the corresponding device. For instance, use 0 for the installed webcam.

Network stream: Supported network streams include rtsp, http and https.
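The following Python sketch shows one way to tell these input types apart from the value given on the command line. It uses the extensions and protocols listed above. It is an assumption-based illustration, not the CLI's own detection logic. classify_input and the returned type names are hypothetical.

```python
import os
from urllib.parse import urlsplit

IMAGE_EXTENSIONS = {"bmp", "jpeg", "jpg", "jpe", "png", "tif", "tiff"}
VIDEO_EXTENSIONS = {"avi", "mp4", "webm", "mjpg"}
STREAM_SCHEMES = {"rtsp", "http", "https"}


def classify_input(value: str) -> str:
    """Guess which input type a command-line value refers to (hypothetical helper)."""
    if value.isdigit():
        return "device"                  # e.g. "0" for the installed webcam
    if urlsplit(value).scheme in STREAM_SCHEMES:
        return "network_stream"
    if os.path.isdir(value):
        return "directory"
    extension = os.path.splitext(value)[1].lower().lstrip(".")
    if extension in IMAGE_EXTENSIONS:
        return "image"
    if extension in VIDEO_EXTENSIONS:
        return "video"
    if extension == "json":
        return "studio_json"
    raise ValueError(f"unsupported input: {value!r}")
```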

Specify input

Inputs are specified with the -i or --input option, whichever of the input types above you use.

Output

Output types

The Deepomatic CLI supports different types of output:

Image: Supported formats include bmp, jpeg, jpg, jpe, png, tif and tiff.

Video: Supported formats include avi and mp4.

Run JSON: Deepomatic Run JSON format for raw predictions.

Studio JSON: Deepomatic Studio JSON format for Studio-like prediction scores. This is specified using the -s or --studio_format option.

Integer wildcard JSON: A standard Run or Studio JSON, except that the file name contains the frame number. For instance, -o frame%03d.json will output frame001.json, frame002.json, ...

String wildcard JSON: Same as the integer wildcard, except that the frame name is used instead. For instance, -o pred_%s.json will output pred_img1_123.json, pred_img2_123.json, ...

Standard output: In rare cases, you might want to write the model results directly to the process standard output using the stdout option. For instance, this lets you stream directly to VLC.

Display output: Opens a window and displays the result. Press q to quit.
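The two wildcard forms behave like printf-style placeholders. This Python sketch expands them with the % operator. It assumes that frames are numbered from 1, as in the frame001.json example, and that a frame name such as img1_123 is already known. expand_output_pattern is a hypothetical helper, not part of the CLI.

```python
import re


def expand_output_pattern(pattern: str, frame_number: int, frame_name: str) -> str:
    """Fill an integer (%d, %03d, ...) or string (%s) wildcard in an output file name."""
    if re.search(r"%0?\d*d", pattern):
        return pattern % frame_number   # integer wildcard: frame number
    if "%s" in pattern:
        return pattern % frame_name     # string wildcard: frame name
    return pattern                      # no wildcard: a single output file


print(expand_output_pattern("frame%03d.json", 1, "img1_123"))  # frame001.json
print(expand_output_pattern("pred_%s.json", 1, "img1_123"))    # pred_img1_123.json
```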

Specify output

Outputs are specified with the -o or --output option, whichever of the output types above you use.

To avoid duplicate computations, you can specify several outputs at the same time, for instance to blur an image and store its predictions in a single run.

Options

Commands accept additional options, which you set with a flag. Most options have both a short flag, such as -f, and a long flag, such as --flag. The short flag uses a single dash and the long flag uses two. Some options also take an additional argument. In the following table, all means that the option applies to all four commands: infer, draw, blur and noop.
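Python's argparse module follows the same short/long flag convention. The sketch below mirrors a few rows of the option table to show how short flags, long flags and arguments relate. It is a hypothetical parser for illustration, not the CLI's source code.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring a few rows of the option table."""
    parser = argparse.ArgumentParser(prog="model-action-sketch")
    # Short and long flag for the same option; both store into args.input
    parser.add_argument("-i", "--input", required=True, help="Input consumed")
    # An option that takes a typed argument
    parser.add_argument("-t", "--threshold", type=float, help="Threshold for predictions")
    # A long-only option, like input_fps in the table
    parser.add_argument("--input_fps", type=float, help="Input FPS used for video extraction")
    # A flag without an argument: its presence alone switches it on
    parser.add_argument("--verbose", action="store_true", help="Increase output verbosity")
    return parser


parser = build_parser()
short_form = parser.parse_args(["-i", "video.mp4", "-t", "0.5"])
long_form = parser.parse_args(["--input", "video.mp4", "--threshold", "0.5", "--verbose"])
```

Both calls produce the same input and threshold values. Only the long form sets verbose.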

| Short | Long | Commands | Description |
|---|---|---|---|
| -i | --input | all | Input consumed. |
| | --input_fps | all | Input FPS used for video extraction. |
| | --skip_frame | all | Number of frames to skip in between two frames. |
| -R | --recursive | all | Recursive directory search. |
| -o | --output | all | Outputs produced. |
| | --output_fps | all | Output FPS used for video reconstruction. |
| -s | --studio_format | infer, draw, blur | Convert from Run to Studio format. |
| -F | --fullscreen | draw, blur, noop | Display in fullscreen when using window output. |
| | --from_file | draw, blur | Use predictions from a precomputed JSON. |
| -r | --recognition_id | infer, draw, blur | Neural network recognition version ID. |
| -t | --threshold | infer, draw, blur | Threshold for predictions. |
| -u | --amqp_url | infer, draw, blur | AMQP URL for on-premises deployments. |
| -k | --routing_key | infer, draw, blur | Recognition routing key for on-premises deployments. |
| -S | --draw_score | draw | Overlay the prediction score. |
| | --no_draw_scores | draw | Do not overlay the prediction score. |
| -L | --draw_labels | draw | Overlay the prediction label. |
| | --no_draw_labels | draw | Do not overlay the prediction label. |
| -M | --blur_method | blur | Blur method: pixel, gaussian or black. |
| -B | --blur_strength | blur | Blur strength. |
| | --verbose | all | Increase output verbosity. |
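Two options in the table involve simple arithmetic. The Python sketch below shows one plausible reading of each. For --skip_frame, it processes one frame and then skips the given number of frames before the next processed frame. For --threshold, it keeps predictions whose score is at least the threshold. Both readings, and the helper names, are assumptions for illustration.

```python
from typing import Dict, Iterable, Iterator, List, Tuple

Prediction = Dict[str, object]


def frames_to_process(frames: Iterable, skip_frame: int) -> Iterator[Tuple[int, object]]:
    """Yield (index, frame), skipping `skip_frame` frames between two processed frames."""
    step = skip_frame + 1
    for index, frame in enumerate(frames):
        if index % step == 0:
            yield index, frame


def apply_threshold(predictions: List[Prediction], threshold: float) -> List[Prediction]:
    """Keep predictions whose score reaches the threshold (using >= is an assumption)."""
    return [p for p in predictions if p["score"] >= threshold]


kept_frames = [i for i, _ in frames_to_process(range(10), skip_frame=2)]  # [0, 3, 6, 9]
preds = [{"label": "car", "score": 0.92}, {"label": "bike", "score": 0.41}]
print(apply_threshold(preds, threshold=0.5))
```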
