Performance report

Click a model version on the Models Library page to open the performance report for that version. Here is an overview of the information you will find:

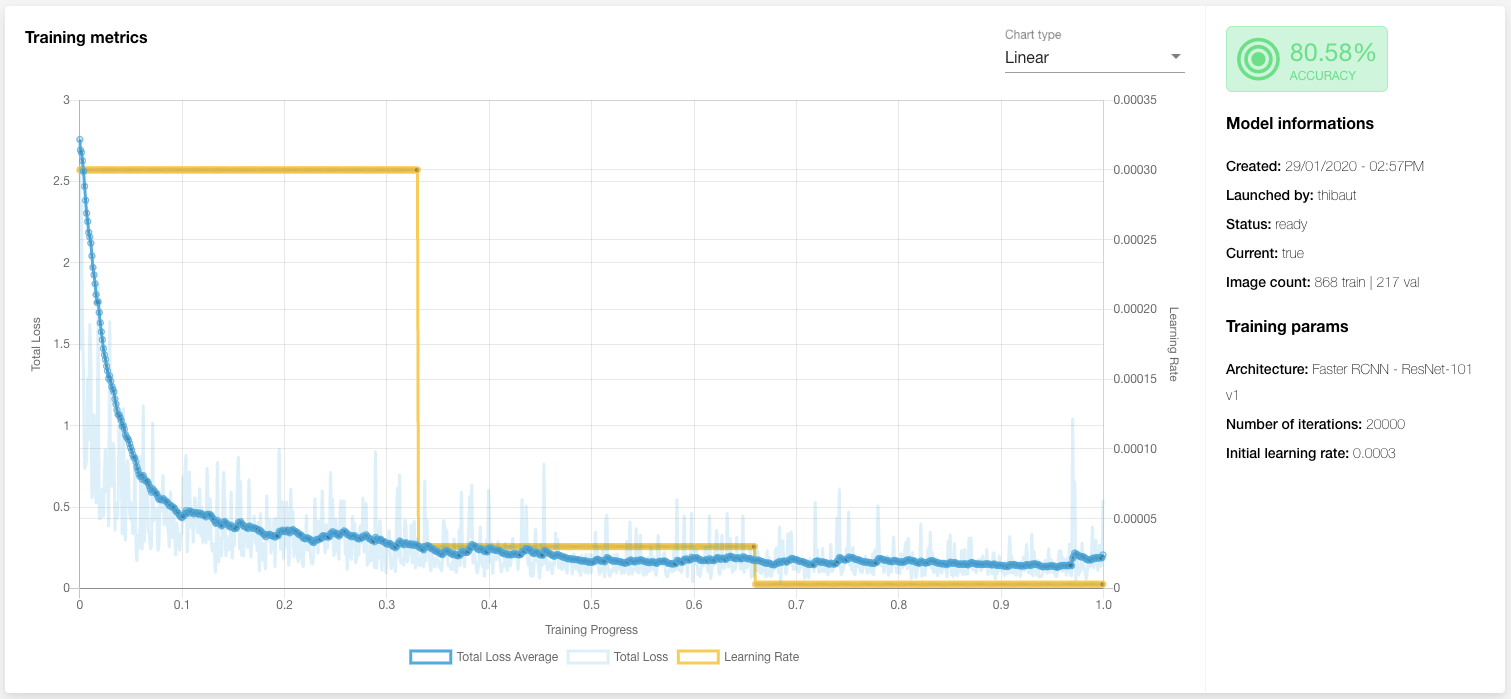

Training metrics, such as the loss and learning rate as training progresses (left-hand side), as well as details about the architecture and other training parameters (right-hand side):

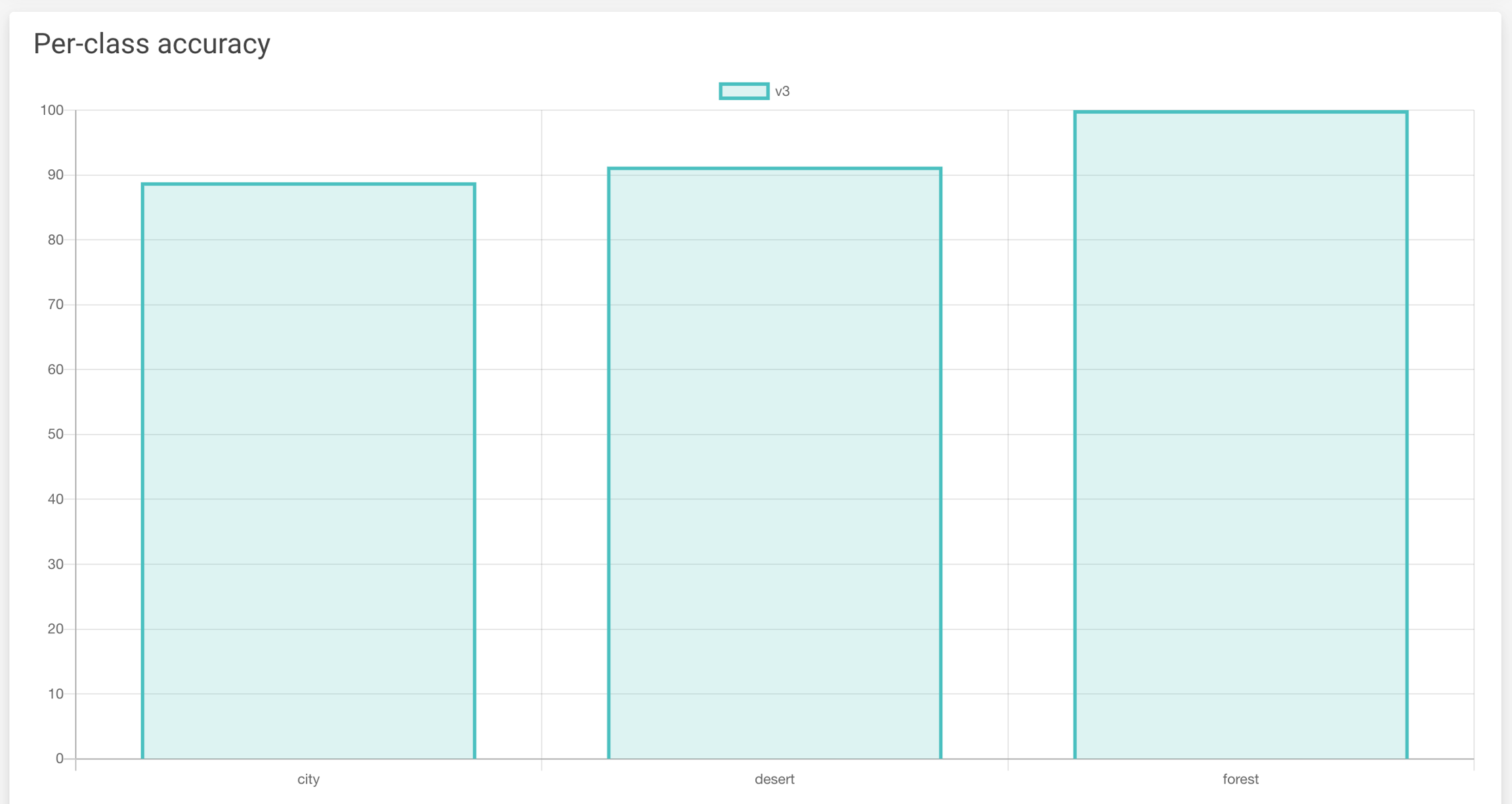

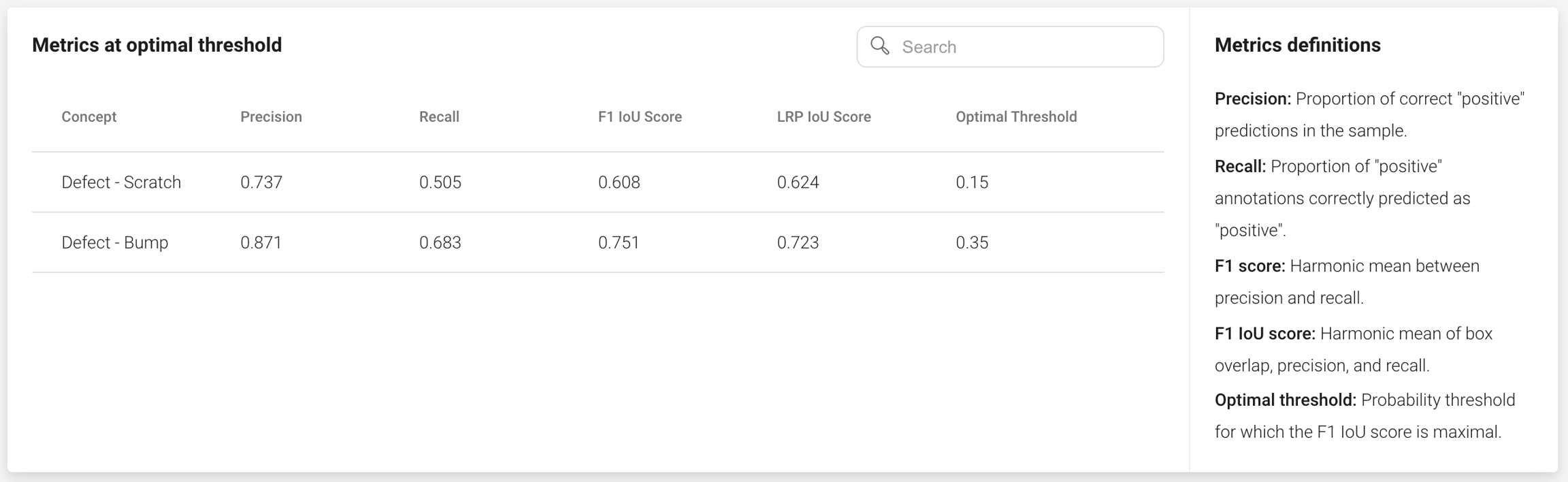

A table summarizing the accuracy of each label (for classification and tagging views) or, for detection views, the precision, recall, F1 IoU score, and LRP IoU score of every concept, computed at the optimal threshold:

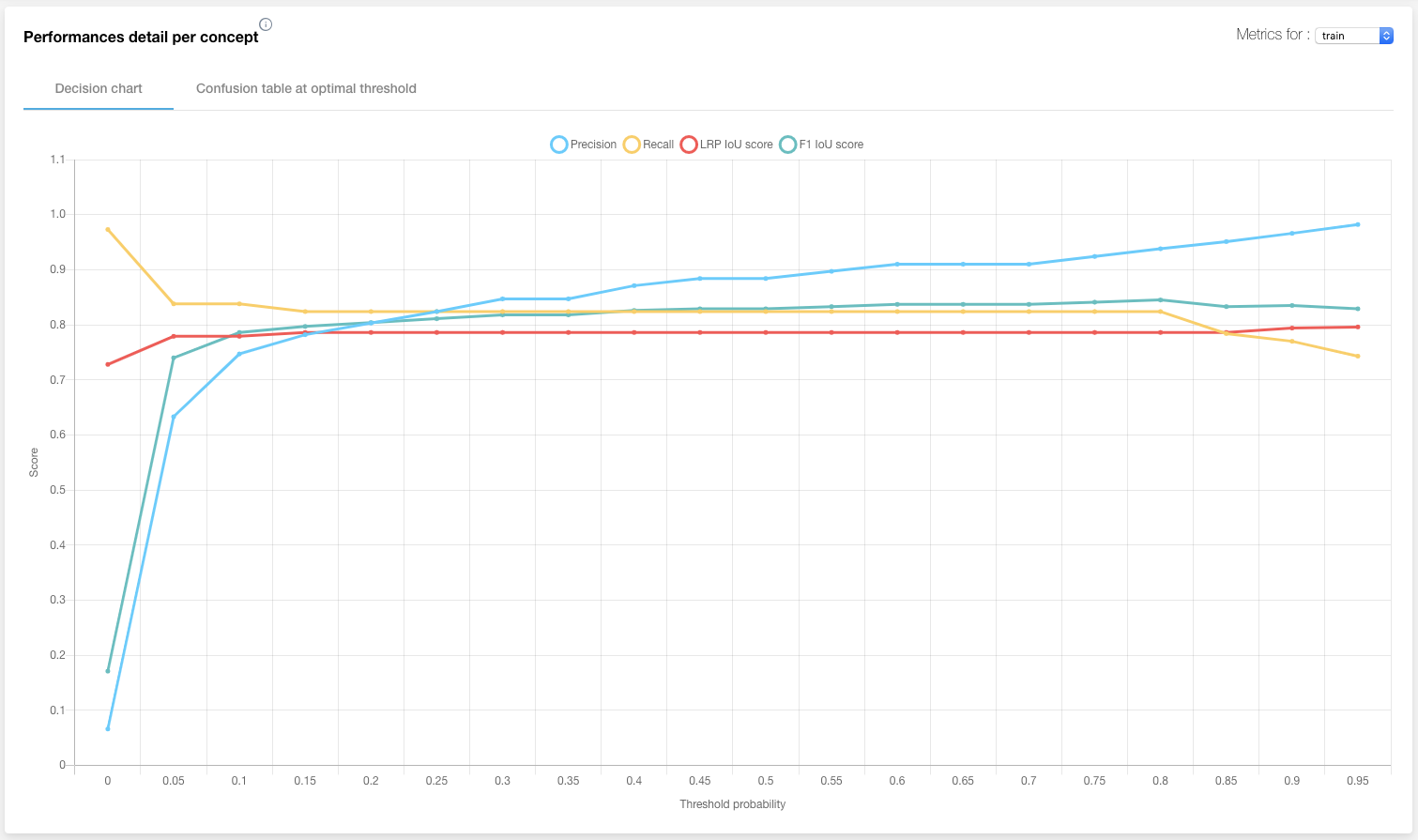

For detection views, you will also get the precision, recall, F1 IoU score, and LRP IoU score at different threshold levels for the concept selected in the top right corner:

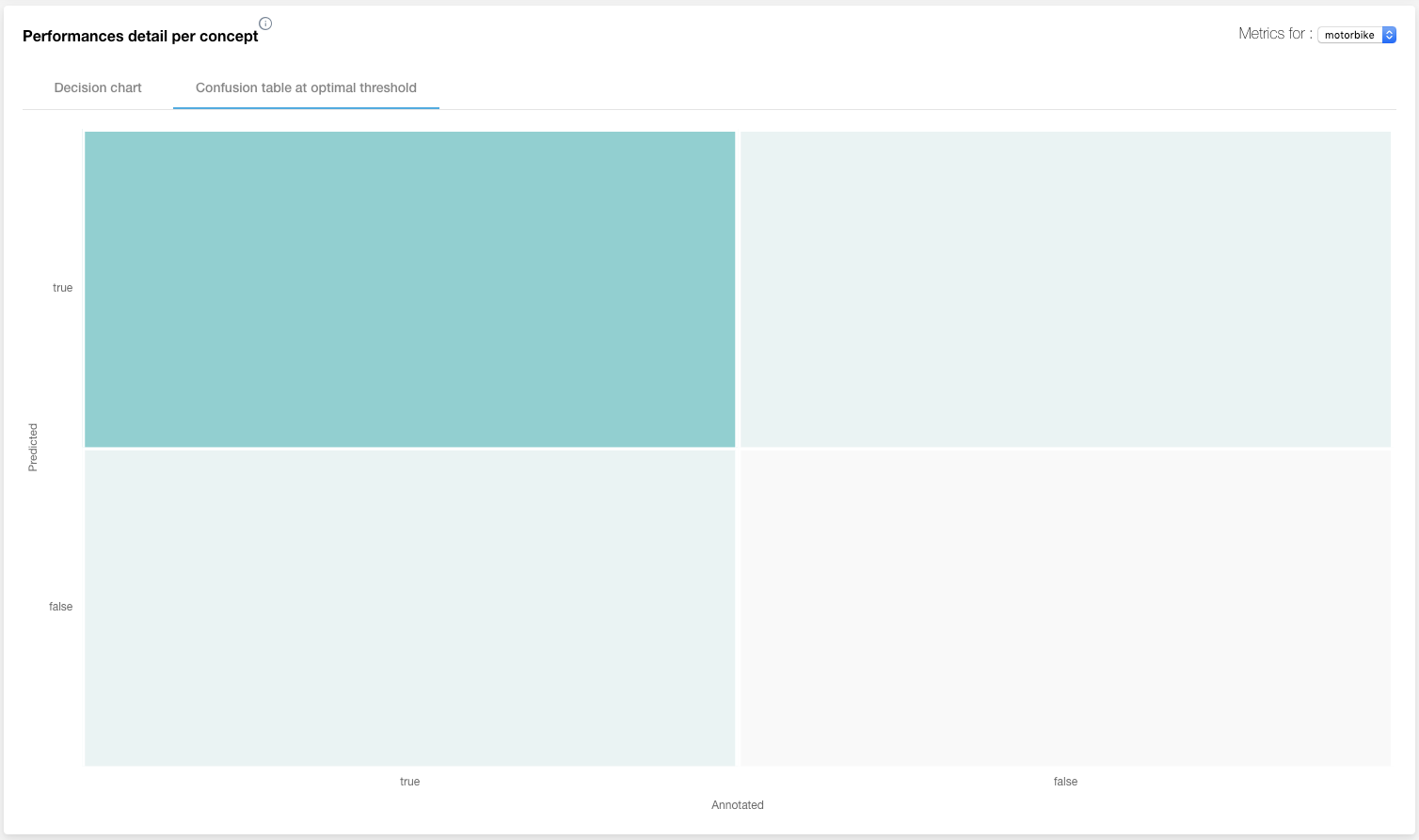
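To make the per-threshold metrics more concrete, here is a minimal Python sketch of how precision, recall, and F1 can be computed for one concept at several confidence thresholds. It is an illustration under assumptions, not the platform's actual computation: the function name `metrics_at_threshold`, the sample detections, and the choice of "optimal threshold = the one that maximizes F1" are all hypothetical, and each detection is assumed to have already been matched (or not) to a ground-truth box using an IoU criterion.

```python
from typing import List, Tuple

# Each detection is (confidence score, matched a ground-truth box?).
# The matching step (for example IoU >= 0.5) is assumed to be done already.
Detection = Tuple[float, bool]


def metrics_at_threshold(
    detections: List[Detection], num_ground_truth: int, threshold: float
) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) using only detections scoring >= threshold."""
    kept = [matched for score, matched in detections if score >= threshold]
    true_positives = sum(kept)
    false_positives = len(kept) - true_positives

    predicted = true_positives + false_positives
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / num_ground_truth if num_ground_truth else 0.0
    denominator = precision + recall
    f1 = 2 * precision * recall / denominator if denominator else 0.0
    return precision, recall, f1


# Hypothetical data for a single concept: 6 detections, 5 ground-truth boxes.
detections = [
    (0.95, True), (0.90, True), (0.80, False),
    (0.70, True), (0.40, False), (0.30, True),
]
num_ground_truth = 5
thresholds = [0.2, 0.5, 0.75, 0.85]

for t in thresholds:
    p, r, f1 = metrics_at_threshold(detections, num_ground_truth, t)
    print(f"threshold={t:.2f}  precision={p:.3f}  recall={r:.3f}  F1={f1:.3f}")

# Assumption: the "optimal" threshold is the one with the highest F1.
best_threshold = max(
    thresholds,
    key=lambda t: metrics_at_threshold(detections, num_ground_truth, t)[2],
)
print("best threshold by F1:", best_threshold)
```

Raising the threshold generally trades recall for precision, which is why the report shows the metrics across a range of thresholds rather than at a single value.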

For each concept, you will also get a confusion matrix computed at the optimal threshold.

For more information on the metrics used to evaluate models, see the page "Metrics explained: detection" or our Guidebook on how to build your custom AI.
